ActiveMQ, prefetch limits, the Dispatch Queue and transactions

The objective of this article is to describe the interaction of ActiveMQ and its consumers, and how message delivery is affected by the use of transactions, acknowledgement modes, and the prefetch setting. This information is particularly useful if you have messages that could potentially take a long time to process.

The Dispatch Queue

The Dispatch Queue contains a set of messages that ActiveMQ has destined to be sent to a particular consumer. These messages are not available to be sent to any other consumer, unless their target consumer runs into an error (such as being disconnected). These messages are streamed to the consumer to allow faster processing. This is also referred to as “pushing” messages to the consumer. It contrasts with the consumer polling for, or “pulling”, a message whenever it is ready to process a new one.

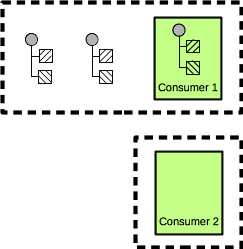

The prefetch limit is defined by the ActiveMQ documentation as “how many messages can be streamed to a consumer at any point in time. Once the prefetch limit is reached, no more messages are dispatched to the consumer until the consumer starts sending back acknowledgements of messages (to indicate that the message has been processed)”. Basically, the prefetch limit defines the maximum number of messages to assign to the dispatch queue of a consumer. This can be seen in the following diagram (the dispatch queue of each consumer is depicted by the dotted line):

Dispatch queue with a prefetch limit of 5 and transactions enabled in the consumer

Streaming multiple messages to a client is a very significant performance boost, especially when messages can be processed quickly. Therefore the defaults are quite high:

- persistent queues (default value: 1000)

- non-persistent queues (default value: 1000)

- persistent topics (default value: 100)

- non-persistent topics (default value: Short.MAX_VALUE - 1)

The prefetch values can be configured at the connection level, with the value reflected in all consumers using that connection. The value can be overridden on a per-consumer basis. Configuration details can be found in the ActiveMQ documentation.
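As a minimal sketch of the two configuration levels, the helper below builds the option strings that ActiveMQ reads: `jms.prefetchPolicy.*` on the broker URL for the connection level, and `consumer.prefetchSize` on the destination name for a single consumer. The class `PrefetchUris`, the broker address and the queue name `ORDERS.SLOW` are hypothetical and only serve the illustration; check the option names against the documentation for your ActiveMQ version.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: it only builds strings and does not talk to a broker.
final class PrefetchUris {

    /** Connection level: appends jms.prefetchPolicy.<name>=<value> options to a broker URL. */
    static String brokerUrl(String base, Map<String, Integer> policy) {
        StringJoiner query = new StringJoiner("&");
        policy.forEach((name, value) -> query.add("jms.prefetchPolicy." + name + "=" + value));
        return policy.isEmpty() ? base : base + "?" + query;
    }

    /** Consumer level: appends consumer.prefetchSize to a destination name. */
    static String destination(String name, int prefetchSize) {
        return name + "?consumer.prefetchSize=" + prefetchSize;
    }

    public static void main(String[] args) {
        Map<String, Integer> policy = new LinkedHashMap<>();
        policy.put("queuePrefetch", 10); // applies to every queue consumer on the connection
        System.out.println(brokerUrl("tcp://localhost:61616", policy));
        System.out.println(destination("ORDERS.SLOW", 1)); // override for one slow consumer
    }
}
```

In a real client the first string would typically be handed to the connection factory and the second used as the queue name when the consumer's destination is created.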

Normally messages are distributed somewhat evenly, but by default ActiveMQ doesn’t guarantee balanced loads between the consumers (you can plug in your own DispatchQueue policy, but in most cases that would be overkill). Messages might be unevenly distributed in cases such as:

- A new consumer connects after all of the available messages have already been committed to the Dispatch Queue of the consumers already connected.

- Consumers that have different priorities.

- If the number of messages is small, they might all be assigned to a single consumer.

Tuning these numbers is normally not necessary, but if messages take (or could potentially take) a significantly long time to process, it might be worth the effort. For example, you might want to ensure a more even balancing of message processing across multiple consumers, to allow processing in parallel. While the competing consumer pattern is very common, ActiveMQ’s Dispatch Queue could get in your way. In particular, one of the consumers can have all of the pending messages (up to the prefetch limit) assigned to its Dispatch Queue. This would leave other consumers idle. Such a case can be seen below:

Dispatch queue with a prefetch limit of 5 and transactions enabled in the consumer

This is normally not a big issue if messages are processed quickly. However, if the processing time of a message is significant, tweaking the prefetch limit is an option to get better performance.
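The uneven case can be reproduced with a toy model. The sketch below is not ActiveMQ's dispatch algorithm; it assumes the worst case described above, in which pending messages are earmarked for consumers in the order they connected, each consumer taking up to the prefetch limit. The class and method names are hypothetical.

```java
import java.util.Arrays;

// Toy model only: real brokers normally spread messages more evenly than this.
final class DispatchModel {

    /**
     * Hands `pending` messages to consumers in connection order, each consumer
     * taking at most `prefetch` messages. Returns how many messages end up in
     * each consumer's dispatch queue; anything left over stays on the broker.
     */
    static int[] assign(int pending, int consumers, int prefetch) {
        int[] dispatched = new int[consumers];
        for (int i = 0; i < consumers && pending > 0; i++) {
            int taken = Math.min(prefetch, pending);
            dispatched[i] = taken;
            pending -= taken;
        }
        return dispatched;
    }

    public static void main(String[] args) {
        // Prefetch 5: the first consumer holds all five messages, the second is idle.
        System.out.println(Arrays.toString(assign(5, 2, 5))); // [5, 0]
        // Prefetch 1: both consumers hold one message, three wait on the broker.
        System.out.println(Arrays.toString(assign(5, 2, 1))); // [1, 1]
    }
}
```

With the lower limit, the three remaining messages stay available to whichever consumer acknowledges first, instead of waiting behind a busy one.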

Queues with low message volume and high processing time

While it’s a best practice to ensure your consumer can process messages very quickly, that’s not always possible. Sometimes you have to call a third party system that might be unreliable, or the business logic just keeps growing without much thought about the real world implications.

For consumers with very long or highly variable processing times, it is recommended to reduce the prefetch limit. A low prefetch limit prevents messages from “backing up” in the dispatch queue, earmarked for a consumer that is busy:

Dispatch queue with a prefetch limit of 5 and transactions enabled in the consumer

This behavior can be seen in the ActiveMQ console with a symptom most people describe as “stuck” messages, even though some of the consumers are idle. If this is the case, it’s worth examining the consumers:

The “Active Consumers” view can help shed light on what is actually happening:

Screenshot showing a consumer with 5 messages in its Dispatch Queue. The prefetch limit is set at 5 for this consumer.

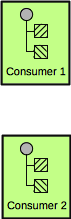

To address the negative effects of such cases, a prefetch limit of 1 will ensure maximum usage of all available consumers:

Dispatch queue with a prefetch limit of 1 and transactions enabled in the consumer

This will negate some of the efficiencies of streaming a large number of messages to a consumer, but this is negligible in cases where processing each message takes a long time.

The Dispatch Queue and transactions (or lack thereof)

When the consumer is set to use Session.AUTO_ACKNOWLEDGE, the consumer will automatically acknowledge a message as soon as it receives it, and only then start actually processing the message. In this scenario, ActiveMQ has no idea whether the consumer is busy processing a message or not, and will therefore not count that message against the consumer's dispatch queue. As a result, it’s possible for a SECOND message to be queued for a busy consumer, even if another consumer is idle:

Dispatch queue with a prefetch limit of 1 and auto acknowledge enabled

If Consumer 1 takes a long time processing its message, the second message could take a long time to even start being processed. This could have a significant impact on performance. Normal troubleshooting might reveal a few discrepancies:

- We have messages waiting to be picked up in ActiveMQ

- We have idle consumers
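The bookkeeping behind this discrepancy can be sketched with a small model. It follows the description of auto acknowledgement given in this article rather than ActiveMQ's internals, and the class `ConsumerSlot` and its methods are hypothetical: the broker only sees unacknowledged messages, so a consumer that acknowledged on receipt looks free even while it is still working.

```java
// Toy model of what the broker can observe about one consumer.
final class ConsumerSlot {
    private final int prefetch;
    private int unacknowledged; // what the broker counts against the prefetch limit
    private boolean processing; // what the consumer is actually doing

    ConsumerSlot(int prefetch) {
        this.prefetch = prefetch;
    }

    /** Broker-side check: may another message be dispatched to this consumer? */
    boolean brokerCanDispatch() {
        return unacknowledged < prefetch;
    }

    boolean isProcessing() {
        return processing;
    }

    /** The consumer receives a message; with autoAck the ack goes out before processing. */
    void receive(boolean autoAck) {
        unacknowledged++;
        processing = true;
        if (autoAck) {
            unacknowledged--;
        }
    }

    /** Processing ends; a transacted consumer acknowledges only now, on commit. */
    void finish(boolean autoAck) {
        processing = false;
        if (!autoAck) {
            unacknowledged--;
        }
    }

    public static void main(String[] args) {
        ConsumerSlot transacted = new ConsumerSlot(1);
        transacted.receive(false);
        System.out.println(transacted.brokerCanDispatch()); // false: slot is visibly full

        ConsumerSlot autoAck = new ConsumerSlot(1);
        autoAck.receive(true);
        System.out.println(autoAck.brokerCanDispatch()); // true, although it is still busy
    }
}
```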

How can this situation be prevented? For such cases, one option is to disable the dispatch queue altogether by setting the prefetch limit to zero. This forces consumers to fetch a message every time they’re idle, instead of waiting for messages to be pushed to them. This will further degrade the performance of JMS delivery, so it should be used with care. However, it will ensure that all available consumers are kept busy:

Consumer with no dispatch queue (prefetch limit set to zero)
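To get a feel for the trade-off, the sketch below compares total completion time in two simplified situations: every message earmarked for a single busy consumer, and idle consumers pulling one message at a time. It is a back-of-the-envelope model with hypothetical names and processing times, and it deliberately ignores the extra round trip that each pull costs.

```java
import java.util.PriorityQueue;

// Toy timing model; processing times are in seconds and purely illustrative.
final class PullModel {

    /** All messages sit in one consumer's dispatch queue and are processed in sequence. */
    static long allOnOneConsumer(long[] processingSeconds) {
        long total = 0;
        for (long s : processingSeconds) {
            total += s;
        }
        return total;
    }

    /** Prefetch zero: whichever consumer becomes idle first pulls the next message. */
    static long pullWhenIdle(long[] processingSeconds, int consumers) {
        // Each entry is the time at which one consumer becomes idle again.
        PriorityQueue<Long> freeAt = new PriorityQueue<>();
        for (int i = 0; i < consumers; i++) {
            freeAt.add(0L);
        }
        long finish = 0;
        for (long s : processingSeconds) {
            long done = freeAt.poll() + s; // earliest idle consumer takes the message
            finish = Math.max(finish, done);
            freeAt.add(done);
        }
        return finish;
    }

    public static void main(String[] args) {
        long[] jobs = {10, 10, 10, 10};
        System.out.println(allOnOneConsumer(jobs)); // 40
        System.out.println(pullWhenIdle(jobs, 2));  // 20
    }
}
```

The longer each message takes, the smaller the per-pull overhead becomes relative to the time saved by keeping every consumer busy.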

Final thoughts and considerations

While the default prefetch limit is good enough for most applications, a good understanding of what is happening under the covers can go a long way in tuning a system.
